How the Interior Architecture and Design Program at Utah State University used RM Compare to improve admissions

Case Study | Caine College of the Arts

Background

The Department of Art and Design at Utah State University is a dynamic community dedicated to excellence in visual art, design, and scholarship. Recognised for its nationally accredited programs, the department combines rigorous foundational study, creative innovation, and professional skill development within a supportive environment.

Introduction

The Interior Architecture and Design program at Utah State University (USU) is an acclaimed and competitive four-year Bachelor’s degree, recognised as the first in Utah to earn Council for Interior Design Accreditation (CIDA). Students complete 120 semester credits and undergo rigorous portfolio reviews in their first and second years to progress in the program.

The challenge

Recruiting fairly for this highly competitive course through traditional assessment techniques presents significant challenges related to bias, reliability, and validity. Not getting this right could produce several negative consequences that undermine both fairness and institutional quality:

- Reduced fairness and increased subjectivity

- Lower validity and creativity suppression

- Compromised reputation and outcomes

- Increased administrative burden

- Stagnation in assessment innovation

Reduced fairness and increased subjectivity

- Traditional marking systems often amplify personal bias and subjective interpretation, as assessors rely on their own preferences or interpretations of vague rubrics. This can result in inconsistent, unreliable decisions and unfairly disadvantage some applicants.

- Candidates from non-traditional backgrounds or with unconventional portfolios may be excluded, reducing access and diversity within the program.

Lower validity and creativity suppression

- Analytical, “tick-box” approaches fragment portfolios into isolated criteria, missing the holistic, aesthetic, and innovative qualities Caine College values in creative disciplines. As a result, portfolios that demonstrate true creative potential, originality, or unconventional approaches risk being overlooked in favour of formulaic submissions.

- Faculty may become dissatisfied with assessment processes that do not reflect their professional judgment or the complexity of artistic work, leading to disengagement.

Compromised reputation and outcomes

- Persistent bias and lack of reliability can damage Caine College’s reputation for equity, integrity, and excellence in creative arts education.

- Students selected through flawed traditional assessments may not represent the full spectrum of talent, potentially impacting future league tables, graduate outcomes, and program prestige.

Increased administrative burden

- Traditional assessments require extensive time, standardisation, and moderation without guaranteeing fairer or more reliable results.

- Scaling admissions processes as program demand grows becomes increasingly difficult with face-to-face interviews and labour-intensive portfolio reviews.

Stagnation in assessment innovation

- By not embracing more dynamic and evidence-based methods like RM Compare, the college risks missing out on the benefits of emerging best practices in creative assessment, hindering its ability to lead in educational innovation.

Summary

Sticking with traditional assessment methods creates a risk of bias, reduced creativity, inefficiency, and reputational harm, whereas Comparative Judgement with RM Compare delivers greater fairness, validity, and alignment with the values of art and design education.

The alternative

Comparative Judgement using RM Compare is a superior approach for allocating places in competitive courses like USU’s Interior Architecture and Design program, especially when assessing creative portfolios. Unlike traditional assessment methods that rely on marking schemes or numeric grades (often ill-suited to subjective, open-ended work), Comparative Judgement harnesses the collective expertise of multiple assessors to make direct side-by-side comparisons between candidate submissions. This results in several key benefits:

- Increased reliability and objectivity

- Greater validity and breadth

- Efficiency and scalability

- Enhanced feedback and engagement

Increased reliability and objectivity

- Each portfolio is judged multiple times by different assessors, which reduces the influence of individual bias and “rogue” judgements and leads to consistently high reliability.

- Anonymised assessment further mitigates unconscious bias, ensuring decisions are based solely on portfolio quality rather than candidate identity.

Greater validity and breadth

- The approach frees assessors from restrictive rubrics, allowing them to holistically evaluate a candidate’s true level of ability and creativity across diverse media, which is essential in design disciplines.

- Comparative Judgement captures a broader range of skills and innovation, supporting a more valid assessment of talent.

Efficiency and scalability

- The process is faster than traditional grading; assessors quickly identify which work is better in a series of paired comparisons, and an algorithm efficiently computes a reliable rank order.

- There’s less need for time-consuming standardisation meetings, as consensus is built through the judging process itself.
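How a set of paired judgements can be turned into a rank order is sketched below. This is a minimal illustration only: it assumes a Bradley-Terry model fitted with simple iterative updates, which is a common choice for comparative judgement but is not confirmed here as the algorithm RM Compare uses. The function name `bradley_terry`, the `prior` smoothing parameter, and the sample judgements are all hypothetical.

```python
from collections import Counter


def bradley_terry(comparisons, prior=0.5, n_iter=500, tol=1e-10):
    """Estimate a quality score per item from (winner, loser) pairs.

    Assumption: a Bradley-Terry model. `prior` adds a small number of virtual
    wins and losses against a reference item of strength 1, so that items
    which never win (or never lose) still receive a finite score.
    """
    items = sorted({item for pair in comparisons for item in pair})
    wins = Counter(winner for winner, _ in comparisons)
    meetings = Counter(frozenset(pair) for pair in comparisons)
    strength = dict.fromkeys(items, 1.0)

    for _ in range(n_iter):
        updated = {}
        for i in items:
            # Contribution of the virtual comparisons against the reference item.
            denom = 2 * prior / (strength[i] + 1.0)
            for j in items:
                n_ij = meetings[frozenset((i, j))] if j != i else 0
                if n_ij:
                    denom += n_ij / (strength[i] + strength[j])
            updated[i] = (wins[i] + prior) / denom
        change = max(abs(updated[i] - strength[i]) for i in items)
        strength = updated
        if change < tol:
            break
    return strength


# Hypothetical judgements: each tuple is (preferred portfolio, other portfolio).
judgements = (
    [("A", "B")] * 3 + [("B", "A")]
    + [("B", "C")] * 3 + [("C", "B")]
    + [("A", "C")] * 3 + [("C", "A")]
)
strengths = bradley_terry(judgements)
rank_order = sorted(strengths, key=strengths.get, reverse=True)
print(rank_order)
```

Because every judgement only records which of two portfolios was preferred, the judges never need to agree on a numeric scale; the scores emerge from the pattern of wins and losses.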

Enhanced feedback and engagement

- Candidates and faculty gain clearer feedback on strengths and areas for improvement through relative ranking, not just scores.

- The process supports reflective practice among assessors, deepening their understanding of standards and quality.

Summary

In summary, Comparative Judgement via RM Compare delivers a fairer, more valid, more reliable, and more efficient way to allocate places in creative, competitive programmes by focusing on authentic, expert-led comparison rather than rigid marking schemes.

The comparative approach

Candidates were asked to submit a multi-page design portfolio including a series of projects inspired by Eero Saarinen’s TWA Flight Center at New York’s JFK airport, widely regarded as a masterpiece of modern architecture.

The design brief sought to encourage diverse responses from candidates to allow them to fully demonstrate their approach, design skills and potential. In total, 67 portfolios were submitted, anonymised, and added to an RM Compare session.

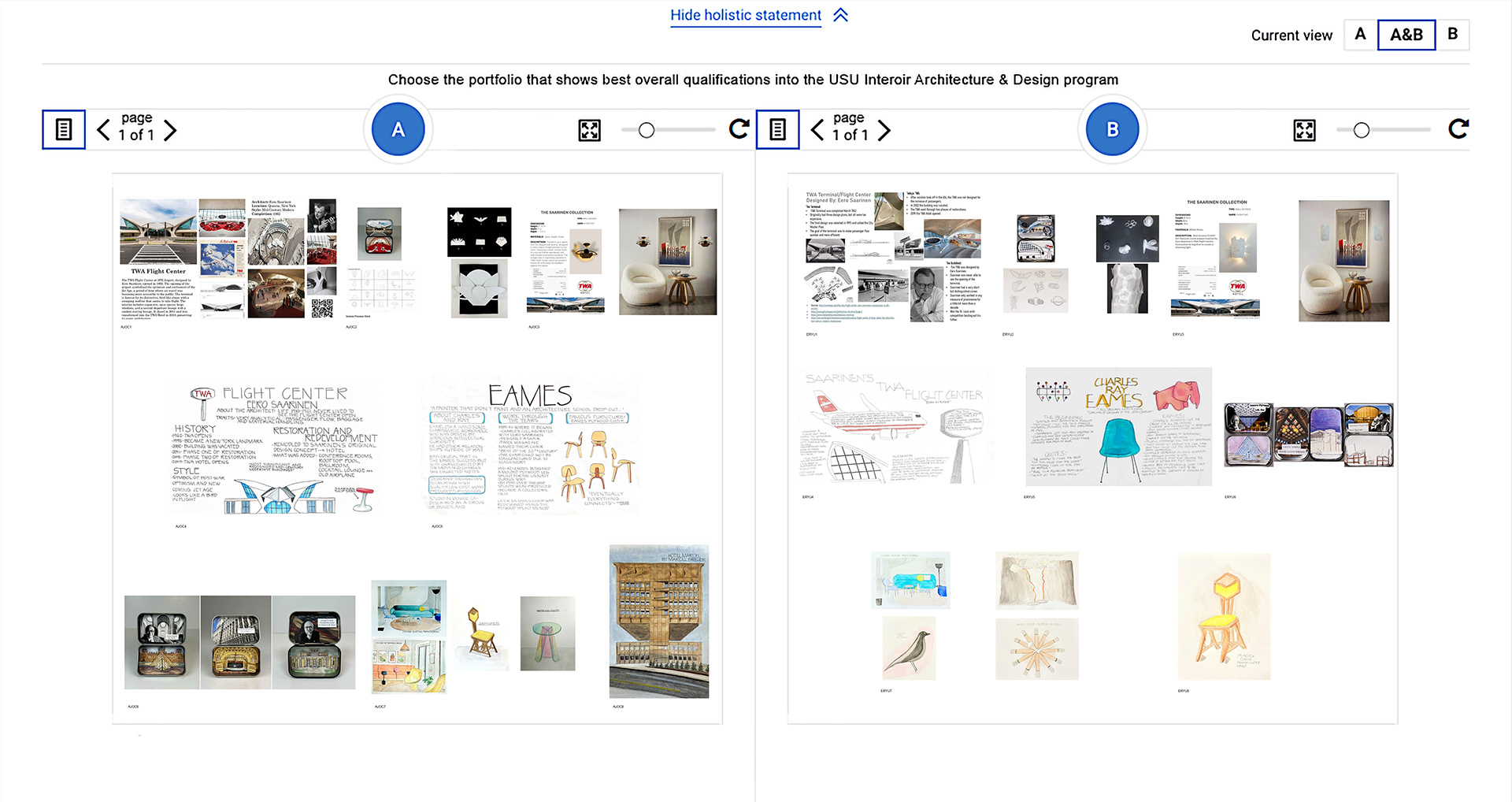

A panel of 18 judges (four faculty members and invited alumni) was added to the session. The alumni group was spread across multiple states nationwide. Each judge was asked to complete around 37 judgements; you can see the judge’s view below.

When making their judgements, they were asked to consider the holistic statement only, which in this case was: “Choose the portfolio that shows the best overall qualifications into the USU Interior Architecture & Design program.”

In total, 646 judgements were made, with each portfolio being seen around 19 times.

The average decision time was 76 seconds, with all judges completing their 37 allocated judgements in under an hour (so at most 18 hours of work in total).
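The session figures above can be cross-checked with a few lines of arithmetic. This is a rough sketch that uses only the numbers quoted in the text; it assumes each judgement shows exactly two portfolios and that the 76-second average applies to every judgement. The variable names are illustrative.

```python
# Figures quoted in the case study.
portfolios = 67
judges = 18
total_judgements = 646
allocated_per_judge = 37
mean_decision_seconds = 76

# Each judgement displays two portfolios, so appearances = 2 x judgements.
views_per_portfolio = 2 * total_judgements / portfolios

# Average number of judgements actually made per judge.
judgements_per_judge = total_judgements / judges

# Time for one judge to complete a full allocation at the average pace.
minutes_per_judge = allocated_per_judge * mean_decision_seconds / 60

# Total judging time implied by the average decision time.
total_hours_at_mean = total_judgements * mean_decision_seconds / 3600

print(f"Views per portfolio:  {views_per_portfolio:.1f}")
print(f"Judgements per judge: {judgements_per_judge:.1f}")
print(f"Minutes per judge:    {minutes_per_judge:.1f}")
print(f"Total hours at mean:  {total_hours_at_mean:.1f}")
```

On these assumptions, the 18-hour figure is an upper bound (one hour for each of 18 judges); the average decision time implies a somewhat lower total.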

The outcome

The rank order (Figure 2) shows that the session achieved a very high level of reliability (0.83). Interestingly, in this case a stable, reliable rank order was reached after each portfolio had been seen around 12 times (round 12 = reliability 0.83), which suggests that workload could be reduced further in future sessions.

Figure 2: The rank order

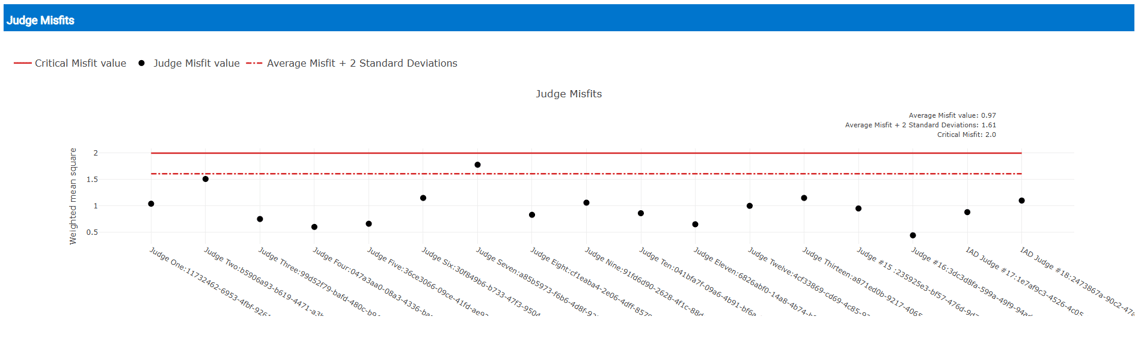
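The potential saving from stopping once each portfolio has been seen around 12 times can be estimated as follows. This is a back-of-the-envelope sketch, not a feature of RM Compare: it assumes each judgement shows two portfolios, that "seen 12 times" means 12 appearances per portfolio, and that the 76-second average decision time would stay the same.

```python
portfolios = 67
actual_judgements = 646
mean_decision_seconds = 76
target_views = 12  # views per portfolio at which the rank order stabilised

# Two portfolios appear in every judgement.
judgements_needed = portfolios * target_views / 2
saving = 1 - judgements_needed / actual_judgements
hours_needed = judgements_needed * mean_decision_seconds / 3600

print(f"Judgements needed: {judgements_needed:.0f}")
print(f"Reduction vs. actual session: {saving:.0%}")
print(f"Estimated judging time: {hours_needed:.1f} hours")
```

Under these assumptions, a future session of the same size would need roughly 400 judgements rather than 646.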

In the Judge misfit chart (Figure 3), we can see how there was a very high level of agreement between the judges on the quality of the portfolios presented. That is to say, there was no judge who had a radically different perception of quality.

Figure 3: Judge misfit

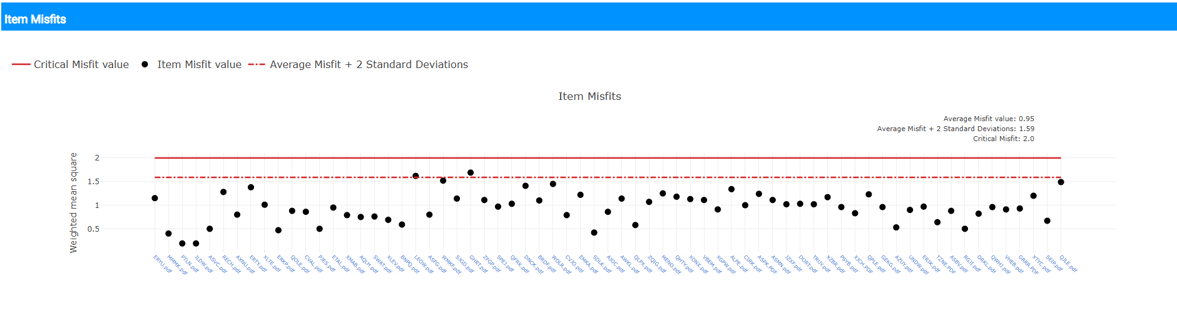

In the Item misfit chart (Figure 4), we can see that all of the items were presented in a way that made them comparable by the judges – that is to say there were none that were ambiguous or presented in a way that made them hard to compare.

Figure 4: Item misfit

Key tests

| Key test | Outcome |
| --- | --- |
| Increased reliability and objectivity | The session reliability of 0.87 was extremely high, and much better than could be expected from traditional assessment methods. Noise and bias were dramatically reduced. |
| Greater validity and breadth | Candidates were able to express themselves with a high degree of freedom and were able to submit more authentic portfolios as a result, which better illustrated their strengths. |
| Efficiency and scalability | The total time of 18 hours for 67 portfolios (around 16 minutes per portfolio), with no need for additional standardisation meetings, was considerably more efficient than traditional approaches. |
| Enhanced feedback and engagement | Judges valued the opportunity to look across the breadth of submissions. The process itself helped to develop tacit knowledge around quality (what good looks like), increasing confidence. |

Summary - trust and fairness

The Department of Art and Design uses comparative assessment because it is a fairer and more trustworthy way to judge creative portfolios. Instead of relying on a single marker or rigid criteria, multiple expert assessors compare each candidate’s work directly with others, anonymously and several times. This means decisions reflect a broad expert consensus rather than one person’s opinion, reducing bias and giving every applicant an equal chance whatever their style or background. Comparative assessment ensures true creative talent stands out, so admissions decisions can be made confidently and transparently.

The future

Adaptive Comparative Judgement with RM Compare has firmly established itself as the best method to fairly assess and select applicants to the Interior Architecture and Design program at Utah State University. Such has been its success that Professor Darrin Brooks, the course leader, is now sharing the benefits of the tool more widely across the university community and beyond.

Try RM Compare

Interested in finding out more?

Learn more about RM’s marking solutions:

https://www.rm.com/assessment/collect-collate-and-mark

Contact us for a conversation